The How’s My Cow project was an Innovate UK-funded collaboration between the Centre for Machine Vision at UWE and Kingshay Dairy Consultants. It focused on developing a non-intrusive herd-monitoring system that uses 3D computer vision to estimate cow condition as the herd passes through a controlled corridor.

Key Technologies

C++ | OpenCV | CUDA | OpenNI | Hardware

How Did It Work?

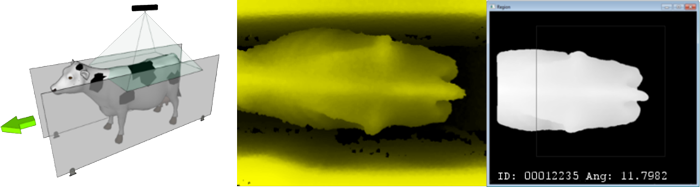

- A structured-light depth sensor was mounted above a corridor through which the cattle travelled en route to the milking parlour.

- Each cow wore an RFID tag, which identified the individual animal so that its measurements could be recorded against it over time.

- The depth map from the 3D camera was analysed to estimate the angularity at key points of the cow's anatomy, namely around the hooks and pins.

- This angularity measurement was then correlated against ground-truth body-condition scores. Each cow was scored by several trained individuals, so that no single scorer's bias dominated the ground truth.

- The whole system was self-contained in an IP66 box with a separate IP66 camera module, and was connected over Ethernet to a central server.

- Calculations were performed on an NVIDIA GPU so that data could be captured and analysed in real time as the cattle passed beneath the sensor.
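The correlation step described above can be illustrated with a minimal C++ sketch. It is not the project's code: it assumes that each cow's scores from the different trained individuals are simply averaged into one consensus score, and that a Pearson coefficient is an acceptable measure of correlation. The function names `meanScore`, `pearson` and `correlateAngularityWithScores` are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <numeric>
#include <stdexcept>
#include <vector>

// Hypothetical helper: average the body-condition scores that several
// assessors gave to one cow, so no single assessor's bias dominates.
double meanScore(const std::vector<double>& assessorScores) {
    if (assessorScores.empty()) {
        throw std::invalid_argument("no scores recorded for this cow");
    }
    const double sum =
        std::accumulate(assessorScores.begin(), assessorScores.end(), 0.0);
    return sum / static_cast<double>(assessorScores.size());
}

// Pearson correlation coefficient between two equal-length samples.
double pearson(const std::vector<double>& x, const std::vector<double>& y) {
    if (x.size() != y.size() || x.size() < 2) {
        throw std::invalid_argument("need two samples of equal length >= 2");
    }
    const double n = static_cast<double>(x.size());
    const double meanX = std::accumulate(x.begin(), x.end(), 0.0) / n;
    const double meanY = std::accumulate(y.begin(), y.end(), 0.0) / n;

    double sxy = 0.0, sxx = 0.0, syy = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        const double dx = x[i] - meanX;
        const double dy = y[i] - meanY;
        sxy += dx * dy;
        sxx += dx * dx;
        syy += dy * dy;
    }
    if (sxx == 0.0 || syy == 0.0) {
        throw std::invalid_argument("a constant sample has no correlation");
    }
    return sxy / std::sqrt(sxx * syy);
}

// Hypothetical driver: angularity[i] is the measurement for cow i, and
// scoresPerCow[i] holds that cow's scores from the different assessors.
double correlateAngularityWithScores(
    const std::vector<double>& angularity,
    const std::vector<std::vector<double>>& scoresPerCow) {
    std::vector<double> consensus;
    consensus.reserve(scoresPerCow.size());
    for (const auto& scores : scoresPerCow) {
        consensus.push_back(meanScore(scores));
    }
    return pearson(angularity, consensus);
}
```

Calling `correlateAngularityWithScores` with one angularity value per cow and one vector of assessor scores per cow returns a coefficient between -1 and 1. Its sign depends on how angularity is defined, and a real analysis might prefer a rank-based measure or a regression fit instead.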

[1] M. F. Hansen, M. L. Smith, L. Smith, I. J. Hales and D. Forbes (2015). “Non-intrusive automated measurement of dairy cow body condition using 3D video”. In Proceedings of the Machine Vision of Animals and their Behaviour (MVAB), pages 1.1-1.8. BMVA Press, September 2015.