ForensicMesh was a low-TRL research demonstrator, funded by Boeing and carried out in collaboration with the Visual Representation Group at Cambridge University. The goal was to assist human operators to define the narrative of a scene as it unfolds in geo-tagged body-worn video footage, by embedding that data into a parsimonious model of the world.

Key Technologies

GoDot | Java | DSO/SLAM

How Did it Work?

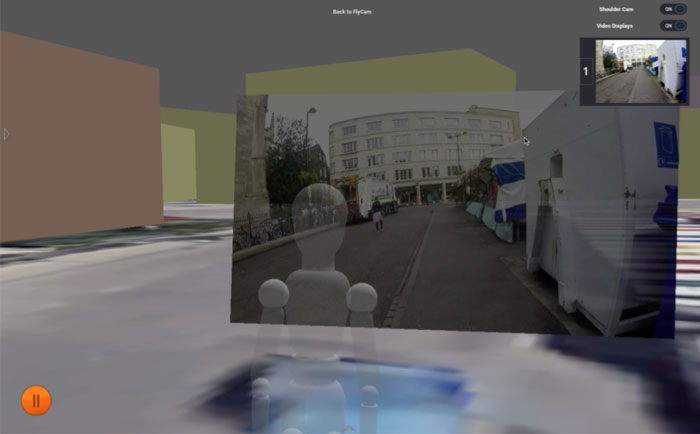

- The hypothesis was that by using a simplified model we would reduce distraction while providing just enough context to assist the user’s navigation through the world.

- Video trajectories for body-worn video were produced using Direct Sparse Odometry, and rectified into world-relative positions, to produce geotemporal-tagged video feeds.

- The parsimonious (simplified) scene model was generated from open-source aerial LiDAR mapping data of Cambridge City Centre.

- Avatars were driven through the model using the trajectories, with the view of the body-worn footage provided in front of them.

- The user could control a fly-cam to view the wider context and all body-worn recordings, or they could view from an avatar’s simulated view-point.

This work was published in the paper “Computer says ‘don’t know’ – interacting visually with incomplete AI models“. All information in this post is publicly available within that paper.