MekaMon is a quadrupedal consumer robot created by Reach Robotics and sold through Apple Stores, Amazon and other retailers. The robot was controlled by a companion app, compatible with both Android and iOS devices. The app included a number of augmented reality game modes that embedded virtual game elements around the physical MekaMon.

Key Technologies

Unity | C# | OpenCV | ARKit | ARCore | ARFoundation | Python | TensorFlow

How Did It Work?

Detection and Tracking

- We need to know where the MekaMon is to interact with it.

- Tracking is fairly easy once we have a confident initial location.

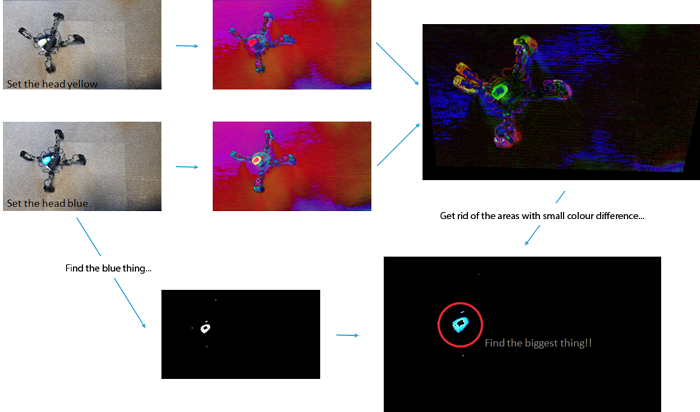

- For the initial detection, flash the MekaMon's head and look for the difference in colour between camera images.

- Images from different views are aligned using back-projection, since we know the camera’s location in the world from odometry (AR Foundation / ARKit / ARCore).

- Tracking then involves “following the blue thing”, with some classification to check that the position and shape of the object are correct.
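The flash-based detection step above can be sketched roughly as follows. This is a minimal illustration, not the shipped implementation: it assumes the two images have already been aligned to the same viewpoint, that the head flashes blue, and that frames are RGB `uint8` arrays. The function name `detect_flash` and the `threshold` and `min_pixels` parameters are hypothetical.

```python
import numpy as np


def detect_flash(frame_off, frame_on, threshold=60, min_pixels=4):
    """Return the (row, col) centroid of pixels that became bluer, or None.

    Both frames are HxWx3 uint8 RGB arrays, assumed to be aligned already.
    The threshold values are illustrative guesses, not tuned numbers.
    """
    # Use signed integers so the subtractions below cannot wrap around.
    off = frame_off.astype(np.int16)
    on = frame_on.astype(np.int16)

    def blueness(img):
        # Blue channel minus the mean of red and green.
        return img[..., 2] - (img[..., 0] + img[..., 1]) // 2

    # Pixels whose blueness rose sharply between the two frames.
    mask = (blueness(on) - blueness(off)) > threshold
    if mask.sum() < min_pixels:
        return None  # not enough evidence for a confident detection

    rows, cols = np.nonzero(mask)
    return float(rows.mean()), float(cols.mean())


# Synthetic example: a grey scene where a small patch turns blue.
frame_off = np.full((20, 30, 3), 50, dtype=np.uint8)
frame_on = frame_off.copy()
frame_on[5:9, 10:14] = (50, 50, 255)
centroid = detect_flash(frame_off, frame_on)
```

The returned centroid could then seed the tracker, which only needs to search near the previous location on later frames.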

Smarter Detection: Cascades

- Compared LBP and Haar cascades with boosted classifiers for detecting the MekaMon body.

- Worked well if bootstrapped with rough location from previous frame.

- Unreliable in cluttered scenes.
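One way to bootstrap a cascade with the previous frame's location is to run it only on a padded window around the last detection. The sketch below shows just the window arithmetic, under the assumption that boxes are `(x, y, w, h)` in pixels; the helper names and the `margin` value are hypothetical. The cropped region could then be passed to a cascade detector such as OpenCV's `cv2.CascadeClassifier.detectMultiScale`, which is an assumption about tooling rather than something the notes above confirm.

```python
def search_window(prev_box, frame_size, margin=0.5):
    """Expand the previous (x, y, w, h) box by `margin` and clamp to the frame."""
    x, y, w, h = prev_box
    width, height = frame_size
    pad_x = int(w * margin)
    pad_y = int(h * margin)
    x0 = max(0, x - pad_x)
    y0 = max(0, y - pad_y)
    x1 = min(width, x + w + pad_x)
    y1 = min(height, y + h + pad_y)
    return x0, y0, x1 - x0, y1 - y0


def to_frame_coords(detection, window):
    """Map a box found inside the cropped window back to full-frame coordinates."""
    dx, dy, w, h = detection
    return dx + window[0], dy + window[1], w, h


# Previous detection in a 640x480 frame, then a hit found inside the crop.
window = search_window((100, 80, 40, 20), (640, 480))
hit = to_frame_coords((5, 6, 30, 15), window)
```

Restricting the search this way reduces the area in which clutter can cause false positives, at the cost of losing the target if it moves outside the window between frames.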

Segmentation: Investigating Mask-RCNN for AR

- Head tracking only provides a coarse location, which is fine for basic interaction but not enough for immersive scenes (e.g. handling occlusions).

- Researched deep-learning approaches for segmenting the MekaMon in AR scenes.

- Generated a large, varied simulated dataset (~40k images) using Unity.

- Also produced a much smaller, hand-labelled dataset (~115 images).

- The initial investigation used Mask-RCNN with a ResNet backbone, trained by transfer learning on the simulated data and fine-tuned on the hand-labelled data.

- Results were promising, although inference was slow (1 FPS on a desktop).

- Still TODO: Investigate a quantised MobileNet backbone on TF-Lite.
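To illustrate why a per-pixel mask matters for the occlusion case mentioned above, here is a minimal compositing sketch. It assumes the segmentation model yields a boolean mask of robot pixels and that the renderer provides the virtual layer with its own coverage mask; the function name `composite` and the `robot_in_front` flag are hypothetical, and a real AR pipeline would decide front/behind from depth rather than a single flag.

```python
import numpy as np


def composite(camera, virtual_rgb, virtual_mask, robot_mask, robot_in_front=True):
    """Draw the virtual layer over the camera image, hiding it behind the robot.

    camera, virtual_rgb: HxWx3 uint8 images.
    virtual_mask, robot_mask: HxW boolean arrays.
    """
    out = camera.copy()
    draw = virtual_mask.copy()
    if robot_in_front:
        # Do not draw virtual pixels where the physical robot is visible.
        draw &= ~robot_mask
    out[draw] = virtual_rgb[draw]
    return out


# Tiny synthetic example: virtual content on rows 1-2, robot on columns 2-3.
camera = np.full((4, 4, 3), 10, dtype=np.uint8)
virtual_rgb = np.full((4, 4, 3), 200, dtype=np.uint8)
virtual_mask = np.zeros((4, 4), dtype=bool)
virtual_mask[1:3, :] = True
robot_mask = np.zeros((4, 4), dtype=bool)
robot_mask[:, 2:] = True

occluded = composite(camera, virtual_rgb, virtual_mask, robot_mask)
```

With only a coarse head location there is no `robot_mask`, so virtual elements would always be drawn on top of the robot.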